If you’re just finding this post, please check out Erik Marsja’s post describing the same functionality in well-maintained python software that wasn’t available when I originally wrote this post.

I love doing data analyses with pandas, numpy, sci-py etc., but I often need to run repeated measures ANOVAs, which are not implemented in any major python libraries. Python Psychologist shows how to do repeated measures ANOVAs yourself in python, but I find using a widley distributed implementation comforting…

In this post I show how to execute a repeated measures ANOVAs using the rpy2 library, which allows us to move data between python and R, and execute R commands from python. I use rpy2 to load the car library and run the ANOVA.

I will show how to run a one-way repeated measures ANOVA and a two-way repeated measures ANOVA.

1 2 3 4 5 6 7 8 9 10 | |

Below I use the random library to generate some fake data. I seed the random number generator with a one so that this analysis can be replicated.

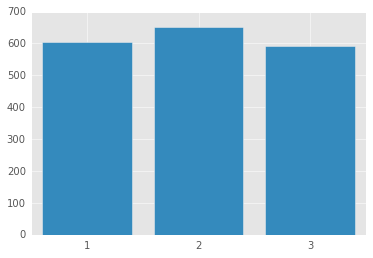

I will generated 3 conditions which represent 3 levels of a single variable.

The data are generated from a gaussian distribution. The second condition has a higher mean than the other two conditions.

1 2 3 4 5 6 7 8 9 | |

Next, I load rpy2 for ipython. I am doing these analyses with ipython in a jupyter notebook (highly recommended).

1

| |

Here’s how to run the ANOVA. Note that this is a one-way anova with 3 levels of the factor.

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 | |

Type III Repeated Measures MANOVA Tests:

------------------------------------------

Term: (Intercept)

Response transformation matrix:

(Intercept)

cond_1 1

cond_2 1

cond_3 1

Sum of squares and products for the hypothesis:

(Intercept)

(Intercept) 102473990

Sum of squares and products for error:

(Intercept)

(Intercept) 78712.7

Multivariate Tests: (Intercept)

Df test stat approx F num Df den Df Pr(>F)

Pillai 1 0.9992 37754.33 1 29 < 2.22e-16 ***

Wilks 1 0.0008 37754.33 1 29 < 2.22e-16 ***

Hotelling-Lawley 1 1301.8736 37754.33 1 29 < 2.22e-16 ***

Roy 1 1301.8736 37754.33 1 29 < 2.22e-16 ***

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

------------------------------------------

Term: Factor

Response transformation matrix:

Factor1 Factor2

cond_1 1 0

cond_2 0 1

cond_3 -1 -1

Sum of squares and products for the hypothesis:

Factor1 Factor2

Factor1 3679.584 19750.87

Factor2 19750.870 106016.58

Sum of squares and products for error:

Factor1 Factor2

Factor1 40463.19 27139.59

Factor2 27139.59 51733.12

Multivariate Tests: Factor

Df test stat approx F num Df den Df Pr(>F)

Pillai 1 0.7152596 35.16759 2 28 2.303e-08 ***

Wilks 1 0.2847404 35.16759 2 28 2.303e-08 ***

Hotelling-Lawley 1 2.5119704 35.16759 2 28 2.303e-08 ***

Roy 1 2.5119704 35.16759 2 28 2.303e-08 ***

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Univariate Type III Repeated-Measures ANOVA Assuming Sphericity

SS num Df Error SS den Df F Pr(>F)

(Intercept) 34157997 1 26238 29 37754.334 < 2.2e-16 ***

Factor 59964 2 43371 58 40.094 1.163e-11 ***

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Mauchly Tests for Sphericity

Test statistic p-value

Factor 0.96168 0.57866

Greenhouse-Geisser and Huynh-Feldt Corrections

for Departure from Sphericity

GG eps Pr(>F[GG])

Factor 0.96309 2.595e-11 ***

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

HF eps Pr(>F[HF])

Factor 1.03025 1.163294e-11

The ANOVA table isn’t pretty, but it works. As you can see, the ANOVA was wildly significant.

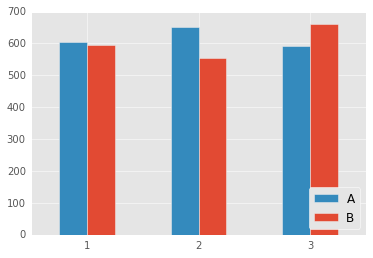

Next, I generate data for a two-way (2x3) repeated measures ANOVA. Condition A is the same data as above. Condition B has a different pattern (2 is lower than 1 and 3), which should produce an interaction.

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 | |

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 | |

Type III Repeated Measures MANOVA Tests:

------------------------------------------

Term: (Intercept)

Response transformation matrix:

(Intercept)

cond_1a 1

cond_2a 1

cond_3a 1

cond_1b 1

cond_2b 1

cond_3b 1

Sum of squares and products for the hypothesis:

(Intercept)

(Intercept) 401981075

Sum of squares and products for error:

(Intercept)

(Intercept) 185650.5

Multivariate Tests: (Intercept)

Df test stat approx F num Df den Df Pr(>F)

Pillai 1 0.9995 62792.47 1 29 < 2.22e-16 ***

Wilks 1 0.0005 62792.47 1 29 < 2.22e-16 ***

Hotelling-Lawley 1 2165.2575 62792.47 1 29 < 2.22e-16 ***

Roy 1 2165.2575 62792.47 1 29 < 2.22e-16 ***

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

------------------------------------------

Term: Factor1

Response transformation matrix:

Factor11

cond_1a 1

cond_2a 1

cond_3a 1

cond_1b -1

cond_2b -1

cond_3b -1

Sum of squares and products for the hypothesis:

Factor11

Factor11 38581.51

Sum of squares and products for error:

Factor11

Factor11 142762.3

Multivariate Tests: Factor1

Df test stat approx F num Df den Df Pr(>F)

Pillai 1 0.2127533 7.837247 1 29 0.0090091 **

Wilks 1 0.7872467 7.837247 1 29 0.0090091 **

Hotelling-Lawley 1 0.2702499 7.837247 1 29 0.0090091 **

Roy 1 0.2702499 7.837247 1 29 0.0090091 **

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

------------------------------------------

Term: Factor2

Response transformation matrix:

Factor21 Factor22

cond_1a 1 0

cond_2a 0 1

cond_3a -1 -1

cond_1b 1 0

cond_2b 0 1

cond_3b -1 -1

Sum of squares and products for the hypothesis:

Factor21 Factor22

Factor21 91480.01 77568.78

Factor22 77568.78 65773.02

Sum of squares and products for error:

Factor21 Factor22

Factor21 90374.60 56539.06

Factor22 56539.06 87589.85

Multivariate Tests: Factor2

Df test stat approx F num Df den Df Pr(>F)

Pillai 1 0.5235423 15.38351 2 28 3.107e-05 ***

Wilks 1 0.4764577 15.38351 2 28 3.107e-05 ***

Hotelling-Lawley 1 1.0988223 15.38351 2 28 3.107e-05 ***

Roy 1 1.0988223 15.38351 2 28 3.107e-05 ***

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

------------------------------------------

Term: Factor1:Factor2

Response transformation matrix:

Factor11:Factor21 Factor11:Factor22

cond_1a 1 0

cond_2a 0 1

cond_3a -1 -1

cond_1b -1 0

cond_2b 0 -1

cond_3b 1 1

Sum of squares and products for the hypothesis:

Factor11:Factor21 Factor11:Factor22

Factor11:Factor21 179585.9 384647

Factor11:Factor22 384647.0 823858

Sum of squares and products for error:

Factor11:Factor21 Factor11:Factor22

Factor11:Factor21 92445.33 45639.49

Factor11:Factor22 45639.49 89940.37

Multivariate Tests: Factor1:Factor2

Df test stat approx F num Df den Df Pr(>F)

Pillai 1 0.901764 128.5145 2 28 7.7941e-15 ***

Wilks 1 0.098236 128.5145 2 28 7.7941e-15 ***

Hotelling-Lawley 1 9.179605 128.5145 2 28 7.7941e-15 ***

Roy 1 9.179605 128.5145 2 28 7.7941e-15 ***

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Univariate Type III Repeated-Measures ANOVA Assuming Sphericity

SS num Df Error SS den Df F Pr(>F)

(Intercept) 66996846 1 30942 29 62792.4662 < 2.2e-16 ***

Factor1 6430 1 23794 29 7.8372 0.009009 **

Factor2 26561 2 40475 58 19.0310 4.42e-07 ***

Factor1:Factor2 206266 2 45582 58 131.2293 < 2.2e-16 ***

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Mauchly Tests for Sphericity

Test statistic p-value

Factor2 0.96023 0.56654

Factor1:Factor2 0.99975 0.99648

Greenhouse-Geisser and Huynh-Feldt Corrections

for Departure from Sphericity

GG eps Pr(>F[GG])

Factor2 0.96175 6.876e-07 ***

Factor1:Factor2 0.99975 < 2.2e-16 ***

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

HF eps Pr(>F[HF])

Factor2 1.028657 4.420005e-07

Factor1:Factor2 1.073774 2.965002e-22

Again, the anova table isn’t too pretty.

This obviously isn’t the most exciting post in the world, but its a nice bit of code to have in your back pocket if you’re doing experimental analyses in python.